Mastering Distributed File System Replication: Best Practices and Configuration Guide

File servers play a crucial role in distributed file system replication (DFSR) by storing files across multiple locations, ensuring efficient synchronization and accessibility. This guide explains the importance of DFSR, how it works, and step-by-step instructions to set it up on Windows servers.

Key Takeaways

- DFSR provides efficient block-level replication, ensuring fast synchronization by transferring only changed file portions, thereby enhancing data availability and minimizing risks of data loss.

- Key components of DFSR include DFS Namespaces for centralized management and Replication Groups for determining synchronization rules, essential for streamlining file access and administration across multiple file servers. This distributed file system allows users to access files as if they were stored locally, optimizing server load and enhancing access speed through replication and a unified namespace.

- Ongoing management, monitoring, and optimization techniques such as bandwidth throttling and staging locations are critical for maintaining DFSR performance and resolving potential issues efficiently.

Contents

- Key Takeaways

- Understanding Distributed File System Replication

- Key Components of DFS Replication

- Setting Up DFS Replication on Windows Servers

- Managing and Monitoring DFS Replication

- Optimizing DFS Replication Performance

- Advanced Features of DFS Replication

- Comparing DFS Replication with Other Solutions

- Best Practices for DFS Replication

- Summary

- Frequently Asked Questions

Understanding Distributed File System Replication

Is DFSR just another Windows Server service? Not quite. Distributed File System Replication (DFSR) is a Windows Server role service that replicates folders across servers and sites with lightning efficiency. It supersedes the aging File Replication Service (FRS) and brings multi-master folder replication to Windows servers.

So why is it so important? As businesses grow, the volume of data they handle grows rapidly. Teams that collaborate across sites need up-to-date files to be available right away, and DFSR keeps data current wherever it resides, reducing the risk of lost information.

DFSR stands out because it can replicate only the altered sections of a file rather than the whole document. This feature, called block-level replication, greatly improves replication speed and efficiency. Picture yourself editing a sizable document: DFSR transfers just the changed parts instead of synchronizing the whole file after every slight edit. This conserves bandwidth and keeps synchronization across all servers prompt.
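
The idea of sending only the changed parts can be illustrated with a minimal sketch. It assumes fixed-size blocks compared by hash, which is a deliberate simplification and not the algorithm DFSR itself uses; the block size, function names, and sample data are all hypothetical.

```python
import hashlib

BLOCK_SIZE = 4  # bytes; tiny on purpose so the example is easy to follow


def block_hashes(data: bytes, block_size: int = BLOCK_SIZE) -> list[str]:
    """Split data into fixed-size blocks and return one SHA-256 digest per block."""
    return [
        hashlib.sha256(data[i:i + block_size]).hexdigest()
        for i in range(0, len(data), block_size)
    ]


def changed_blocks(old: bytes, new: bytes, block_size: int = BLOCK_SIZE) -> list[int]:
    """Return the indexes of blocks in `new` that differ from `old` or did not exist."""
    old_hashes = block_hashes(old, block_size)
    new_hashes = block_hashes(new, block_size)
    return [
        index
        for index, digest in enumerate(new_hashes)
        if index >= len(old_hashes) or digest != old_hashes[index]
    ]


# Hypothetical file contents before and after a small edit.
old_version = b"AAAABBBBCCCCDDDD"
new_version = b"AAAABxBBCCCCDDDDEE"
to_send = changed_blocks(old_version, new_version)
print(to_send)  # only these block indexes would need to cross the network
```

In this sample, two of the five blocks differ, so only those two would be transferred. A fixed-block scheme like this breaks down when bytes are inserted in the middle of a file, because every later block shifts; that limitation is one reason real differential-transfer schemes are more elaborate.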

A key piece of DFSR is its multi-master replication engine, which synchronizes folders across separate servers. Because it tracks folder changes and replicates them efficiently, the engine is well suited to low-bandwidth environments.

DFSR isn’t just replication; it also provides high data availability. Because data is replicated across multiple servers, downtime is reduced and data remains accessible even if a single server fails. This matters for most organizations but is crucial for those with remote offices or distributed teams.

A replication group defines the set of member servers that share and synchronize specific replicated folders, so that files are accessible from any device on the network as if they were stored locally.

Key Components of DFS Replication

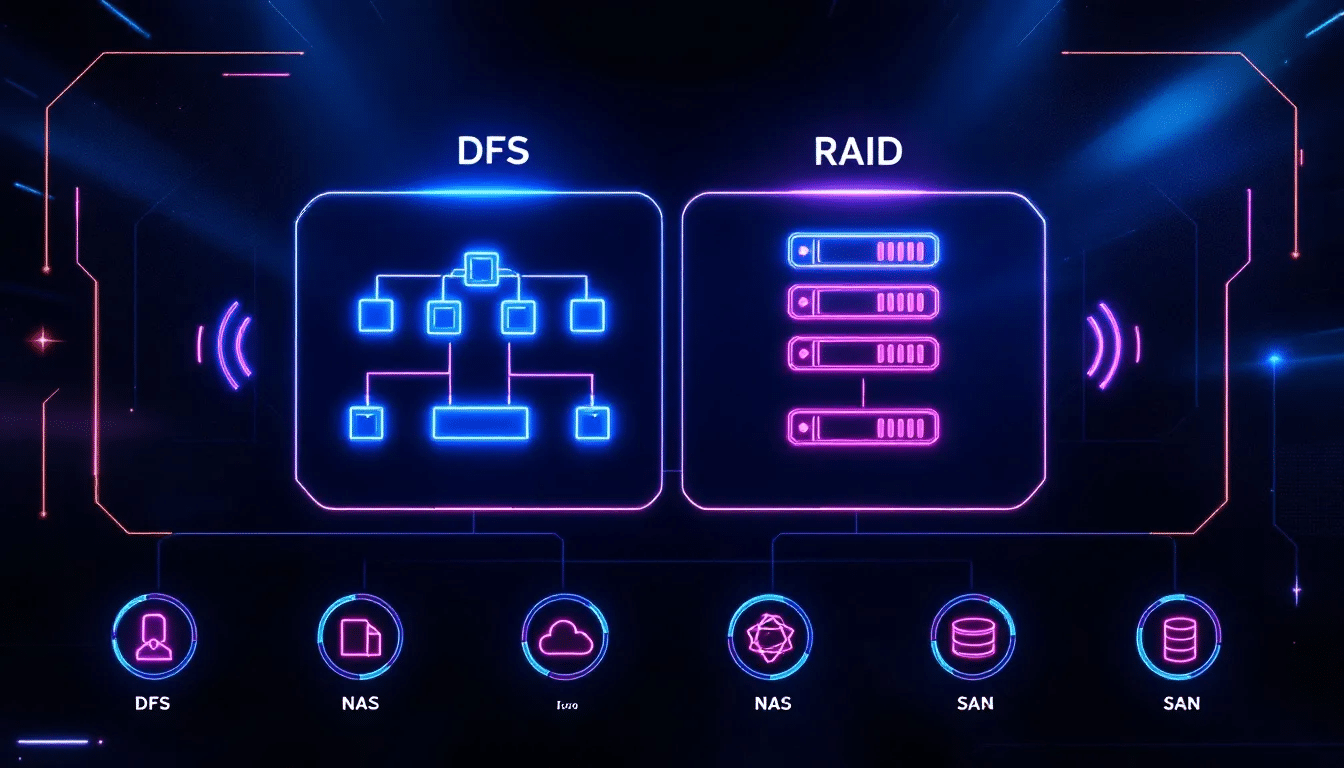

DFSR’s true power comes from its key components and the way they work together. At the heart of it are DFS Namespaces and Replication Groups. Namespaces are essentially virtual folders that link to shared folders across multiple servers, which makes it easier to manage and access those files centrally.

Users can access files on different servers as if they were all in one place, which simplifies file management. You can use either a standalone DFS namespace or a domain-based one. Domain-based DFS Namespaces are particularly useful for organizing multiple SMB file shares under a single folder. That gives you location transparency and redundancy, and makes data access and management across different servers in a networked domain a lot smoother.
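
Location transparency can be pictured as a lookup from one virtual path to several real shares. The sketch below is a simplified model, not how a DFS client actually orders its referrals; the domain, server names, shares, and the resolve function are all hypothetical.

```python
# Hypothetical namespace: each virtual folder maps to one or more folder targets.
NAMESPACE = {
    r"\\contoso.com\Public\Projects": [r"\\FS01\Projects", r"\\FS02\Projects"],
    r"\\contoso.com\Public\Tools": [r"\\FS02\Tools"],
}


def resolve(
    path: str,
    namespace: dict[str, list[str]],
    offline: frozenset[str] = frozenset(),
) -> str:
    """Map a namespace path to a concrete UNC path on the first reachable target."""
    for folder, targets in namespace.items():
        if path == folder or path.startswith(folder + "\\"):
            remainder = path[len(folder):]
            for target in targets:
                # The server name is the first component after the leading backslashes.
                server = target.lstrip("\\").split("\\")[0]
                if server not in offline:
                    return target + remainder
            raise LookupError(f"no reachable target for {folder}")
    raise KeyError(f"{path} is not in the namespace")


request = r"\\contoso.com\Public\Projects\plan.docx"
print(resolve(request, NAMESPACE))
print(resolve(request, NAMESPACE, offline=frozenset({"FS01"})))
```

The user always asks for the same namespace path. Which server answers is decided by the lookup, and that is what gives the redundancy described above.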

Replication Groups are another crucial part of DFSR. A group consists of at least two member servers. For initial replication, one member is designated the primary member and its content is treated as authoritative; after that, DFSR’s multi-master model lets any member originate changes. Within a group, replication settings decide which files and folders are replicated, where they go, and when. By configuring those settings, you can ensure consistent data synchronization across all your servers, which boosts availability and redundancy.
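
A replication group can be thought of as a set of members, a set of replicated folders, and the connections between members. The sketch below is a hypothetical in-memory model, not a DFSR API. It builds the connection lists for two common layouts, full mesh and hub and spoke; the class, method, and server names are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from itertools import permutations


@dataclass
class ReplicationGroup:
    """Hypothetical in-memory model of a replication group (not a DFSR API)."""
    name: str
    members: list[str]
    replicated_folders: list[str] = field(default_factory=list)

    def full_mesh(self) -> list[tuple[str, str]]:
        """Every member sends to every other member (one-way connections)."""
        return list(permutations(self.members, 2))

    def hub_and_spoke(self, hub: str) -> list[tuple[str, str]]:
        """Each spoke exchanges changes only with the hub, in both directions."""
        if hub not in self.members:
            raise ValueError(f"{hub} is not a member of {self.name}")
        connections = []
        for spoke in self.members:
            if spoke != hub:
                connections.append((hub, spoke))
                connections.append((spoke, hub))
        return connections


group = ReplicationGroup("RG-Projects", ["FS01", "FS02", "FS03"], ["Projects"])
print(len(group.full_mesh()))       # number of one-way connections in a full mesh
print(group.hub_and_spoke("FS01"))  # connections when FS01 acts as the hub
```

A full mesh grows quadratically with the number of members, which is why larger deployments often prefer a hub-and-spoke layout.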

Setting Up DFS Replication on Windows Servers

Setting up DFS Replication on Windows Servers involves several steps, from preparing your environment to configuring replicated folders. Implementing DFSR across multiple locations is crucial for efficient data access and management, as it allows data to be stored and accessed from various physical sites. Following these steps optimizes the hierarchy of shared folders and streamlines administration.

The following subsections guide you through each phase of the setup process to ensure a robust DFSR system.

Preparing Your Environment

Preparing your environment is crucial before installation. Ensure that every volume hosting replicated folders is formatted with NTFS, as DFSR does not support other file systems such as ReFS or FAT. Configuring DFS in a domain environment is recommended to take advantage of its high availability features. Enable the DFS role services on your Windows Server 2019 machines to use DFSR.

To ensure a robust DFSR setup, it is important to prepare multiple file servers. Utilizing multiple file servers in a distributed file system allows for optimized server load, enhanced access speed through replication, and a unified namespace.

At least one shared folder on a domain member server is essential for setting up a DFS Namespace. This shared folder will serve as the root for your DFS Namespace, providing a foundation for the replication process.

Installing DFS Replication

Installing DFS Replication on file servers can be done via Server Manager or PowerShell. For those who prefer a graphical interface, Server Manager provides a straightforward way to add the DFS Replication role service. If you prefer PowerShell, the command is Install-WindowsFeature -Name FS-DFS-Replication -IncludeManagementTools. Elevated permissions are required, so run it from an elevated session with the necessary rights.

Once DFSR is installed, you can also install the DFS Namespaces role service with the command Install-WindowsFeature -Name FS-DFS-Namespace. This sets up the infrastructure needed to manage your DFS environment.

Configuring Replicated Folders

After installing DFS Replication, the next step is to configure the replicated folders. Start by creating the namespace structure and the folders you wish to replicate. Creating a domain-based namespace is advisable for better availability and management.

Adjust the local path of the shared folder if necessary; a common example is C:\DFSRoots\DFS-01. Configuring replicated folders across multiple locations ensures better data availability and facilitates efficient information sharing among geographically dispersed users.

Replication groups simplify the management of multiple replicated folders, allowing you to unify settings for replication topology and bandwidth. Ensure that Read/Write permissions are granted for the shared folder on the second server to allow proper access.

To verify the configuration, check that the network path of the folder shared on the second server is reachable. Staging folders in DFS Replication serve as a cache for new and changed files before they are replicated.

Managing and Monitoring DFS Replication

Once DFS Replication is set up, effective management and monitoring are crucial for ensuring smooth operation. Managing multiple file servers is essential for optimal DFSR performance, as it allows for better load distribution and faster access through replication.

The DFS Replication Service allows administrators to manage the replication process by enabling or disabling replication for specific files or folders and adding or removing servers as needed. This flexibility is essential for maintaining optimal performance and resolving conflicts that may arise during the replication process.

Monitoring the replication process is equally important. DFSR provides tools to quickly detect possible errors and problems, allowing administrators to take corrective action before issues escalate. Various metrics, including network latency and file sizes, can affect DFSR performance, making ongoing monitoring a key aspect of system maintenance.

Using the DFS Management Console

The DFS Management Console is a powerful tool that offers a unified interface for overseeing replication settings and statuses. This intuitive interface allows administrators to monitor and control various aspects of the DFS configuration, ensuring that the system operates efficiently.

The DFS Management Console allows you to manage replication groups, configure namespaces, and set replication schedules. This centralized management approach simplifies the administration of DFSR, making it easier to maintain a robust and reliable file replication environment.

Troubleshooting Common Issues

Despite its robustness, DFSR can encounter issues such as synchronization failures, slow speeds, and unreliability. Active Directory issues, unexpected shutdowns, and corrupted databases are common culprits that can disrupt the replication process.

To troubleshoot these issues, the dfsrdiag tool can be invaluable. This tool allows administrators to check the status of DFSR and identify potential problems. Addressing these issues promptly ensures that the replication process remains smooth and reliable.

Optimizing DFS Replication Performance

Optimizing DFS Replication performance involves understanding and managing various factors that affect the replication process. From network latency to file sizes, multiple performance indicators can influence how efficiently DFSR operates.

Monitoring these metrics helps identify potential bottlenecks and take steps to enhance performance. The multi-master replication engine plays a crucial role in optimizing DFSR performance by efficiently synchronizing folders across different servers, especially in environments with limited bandwidth.

In the following subsections, we will explore specific optimization techniques, including bandwidth throttling and the use of staging locations, to ensure that your DFSR system runs at peak efficiency.

Bandwidth Throttling

Bandwidth throttling is a crucial technique for managing DFS Replication, especially over limited-bandwidth network connections. Remote Differential Compression (RDC) complements it by sending only the changes made to a file instead of the entire file. Together they reduce the load on the network and keep replication from interfering with other network activity.

Additionally, DFS Replication can be configured to limit bandwidth use during replication, preventing network congestion. You can set bandwidth throttling on a connection basis in the DFS Management Console, tailoring the settings to different replication scenarios. Using file filters to exclude unnecessary files and subfolders can further optimize bandwidth usage.
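
A quick back-of-the-envelope calculation shows what a throttle costs in transfer time and how much sending only changed data helps. This is a minimal sketch: it assumes 1 kbps means 1,000 bits per second and ignores protocol overhead and compression, and the function name and sample figures are hypothetical.

```python
def transfer_seconds(payload_bytes: int, limit_kbps: int) -> float:
    """Seconds needed to send payload_bytes over a link capped at limit_kbps."""
    if limit_kbps <= 0:
        raise ValueError("limit_kbps must be positive")
    bits = payload_bytes * 8
    return bits / (limit_kbps * 1000)  # assumption: 1 kbps = 1,000 bits per second


full_file = 500 * 1000 * 1000   # a 500 MB file, sent in full
changed_only = full_file // 20  # assumption: only 5% of the file changed

print(transfer_seconds(full_file, 2048))     # whole file at a 2,048 kbps cap
print(transfer_seconds(changed_only, 2048))  # changed portion only, same cap
```

Under these assumptions the full file takes roughly half an hour at the cap, while the changed portion takes under two minutes.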

Staging Locations and Conflict Resolution

Staging locations are essential for the efficient operation of DFS Replication. These areas serve as a cache for new and changed files before they are replicated. Ensuring that the staging area has sufficient space is crucial, as inadequate space can hinder replication efficiency.
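
One commonly cited sizing rule from Microsoft's guidance is that the staging quota on a read-write member should be at least the combined size of the 32 largest files in the replicated folder. The sketch below computes that figure. Treat it as a starting point rather than a guarantee; the function names are hypothetical.

```python
import heapq
import os


def suggested_staging_bytes(file_sizes: list[int], largest_n: int = 32) -> int:
    """Sum the sizes of the largest_n biggest files (rule-of-thumb minimum quota)."""
    return sum(heapq.nlargest(largest_n, file_sizes))


def file_sizes_under(root: str) -> list[int]:
    """Collect the size in bytes of every readable file below root."""
    sizes = []
    for directory, _subdirs, files in os.walk(root):
        for name in files:
            try:
                sizes.append(os.path.getsize(os.path.join(directory, name)))
            except OSError:
                continue  # file vanished or is unreadable; skip it
    return sizes


# Hypothetical sizes in bytes; with largest_n=2 only the two biggest count.
sample_sizes = [10, 500, 20, 300]
print(suggested_staging_bytes(sample_sizes, largest_n=2))
```

To size a real folder, pass the result of file_sizes_under for the replicated folder's local path to suggested_staging_bytes and compare the total with the configured staging quota.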

Conflict resolution is another critical aspect of managing DFS Replication. When the same file is changed on two members before they synchronize, DFSR keeps the most recently modified version (last writer wins) and moves the losing version to the ConflictAndDeleted folder. To maintain data consistency, it’s important to review that folder and have a process for recovering losing versions when needed.
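
For files changed on two members at once, DFSR applies a last-writer-wins rule and sets the losing version aside in its ConflictAndDeleted folder. The sketch below models that rule in miniature. The class and function names are hypothetical, and the tie-break for equal timestamps is an arbitrary choice made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileVersion:
    server: str
    modified: float  # last-modified time, seconds since the epoch
    content: bytes


def resolve_conflict(a: FileVersion, b: FileVersion) -> tuple[FileVersion, FileVersion]:
    """Return (winner, loser): the most recently modified version wins."""
    if a.modified >= b.modified:  # ties go to `a`; arbitrary for this sketch
        return a, b
    return b, a


conflict_folder: list[FileVersion] = []  # stands in for the conflict folder

# Hypothetical edits made on two servers before they synchronized.
edit_on_fs01 = FileVersion("FS01", modified=1_700_000_000.0, content=b"draft A")
edit_on_fs02 = FileVersion("FS02", modified=1_700_000_060.0, content=b"draft B")

winner, loser = resolve_conflict(edit_on_fs01, edit_on_fs02)
conflict_folder.append(loser)  # the losing version is kept, not discarded
print(winner.server, loser.server)
```

The losing edit is not merged into the winner, so users who edit the same file at several sites can still lose work unless someone retrieves the set-aside version.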

Advanced Features of DFS Replication

DFS Replication offers several advanced features that enhance its functionality and efficiency. These features, such as Remote Differential Compression and interoperability with virtual machines, provide significant benefits for organizations looking to configure DFS Replication and optimize their file replication processes.

In the following subsections, we will delve into these advanced features, exploring how they work and the advantages they offer.

Remote Differential Compression

Remote Differential Compression (RDC) is a technology that significantly reduces the amount of data transmitted over the network during replication by identifying changes in files and only transferring the changed portions. This not only optimizes bandwidth usage but also enhances the efficiency of the multi-master replication model used by DFSR.

By minimizing data transfer, RDC plays a crucial role in managing bandwidth usage and improving replication speed.

Interoperability with Virtual Machines

DFS Replication is fully supported on Azure virtual machines, allowing organizations to leverage cloud infrastructure for their replication needs. However, implementing DFSR with Azure virtual machines requires a VPN connection to ensure secure communication between on-premises servers and Azure-hosted members in a replication group.

A VPN connection is essential for maintaining security and ensuring that the replication process operates smoothly across virtual environments. This interoperability with virtual machines provides organizations with the flexibility to implement DFSR in both on-premises and cloud-based environments.

Comparing DFS Replication with Other Solutions

While DFS Replication is a powerful tool for synchronizing files across multiple servers, it’s essential to compare it with other available solutions to make an informed decision.

For instance, Resilio offers a simplified setup process and does not have a single point of failure, enhancing overall network resilience during file replication. Resilio Connect can handle large-scale file synchronization, effectively managing over 250 million files in a single job.

Azure File Sync is another alternative, providing a cloud-native service that synchronizes on-premises files to Azure. However, it ties users to the Azure ecosystem, which may not be suitable for all organizations. Comparing these solutions helps determine which one best meets your organization’s needs.

Best Practices for DFS Replication

Implementing best practices is crucial for the successful deployment and maintenance of DFS Replication. Before deploying DFSR, ensure that all folders to be replicated are on NTFS-formatted volumes, as other formats are not supported. Updating the Active Directory Domain Services schema is also essential for effective DFS replication.

Additionally, verify that the antivirus software in use is compatible with DFSR to avoid disruptions in the replication process. By following these best practices, you can ensure that DFSR provides data availability and redundancy, maintaining user access even during server failures.

Summary

Mastering Distributed File System Replication (DFSR) is a journey that involves understanding its components, setting up the environment, and optimizing its performance. By leveraging DFSR, organizations can ensure high data availability, minimize downtime, and enhance collaboration across multiple servers.

From the initial setup on Windows Servers to managing and troubleshooting common issues, each step plays a crucial role in maintaining an efficient and reliable replication system.

DFSR offers a robust solution for synchronizing files across distributed environments. By following the best practices and utilizing advanced features like Remote Differential Compression and interoperability with virtual machines, you can optimize your DFSR setup for better performance and reliability.

Whether you’re dealing with large-scale data or ensuring redundancy, mastering DFSR will empower your organization to manage data more effectively.

Frequently Asked Questions

What is the primary function of DFS Replication?

The primary function of DFS Replication is to synchronize folders and files across multiple Windows servers, ensuring data consistency and minimizing the risk of data loss.

What file systems are supported by DFSR?

DFSR supports NTFS-formatted volumes for file storage and sharing, but it does not support ReFS or FAT file systems.

How can bandwidth usage be optimized during DFS Replication?

To optimize bandwidth usage during DFS Replication, employ Remote Differential Compression (RDC) to transmit only modified data blocks and configure bandwidth throttling through the DFS Management Console. This approach ensures efficient data transfer and minimizes unnecessary bandwidth consumption.

What are some common issues encountered with DFSR?

Synchronization failures, slow speeds, and unreliability in DFSR are commonly linked to Active Directory issues, unexpected shutdowns, or corrupted databases. Addressing these underlying causes is crucial for improving DFSR performance and reliability.

Is DFS Replication supported on Azure virtual machines?

Yes, DFS Replication is fully supported on Azure virtual machines, provided you establish a VPN connection for secure communication with on-premises servers.